To accompany my series of articles investigating storing and retrieving the digits of Pi from distributed storage, I will be posting supporting material of a more technical nature on this page, to avoid bloating the articles.

PART 1 – Link

Part 1 investigates obtaining the digits from the pi.delivery website and briefly looks at how efficiently they can be stored.

My server / Disk setup

At (currently) 50 trillion digits, over 20TB of storage is required just to store the compressed digits, and if I am to split/recode these into smaller files for distribution, I will probably need 2-3x this amount of space.
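A rough sketch of where the 20TB+ figure comes from, assuming every digit is packed into fixed-size chunks and ignoring file headers and metadata. `storage_tb` is a hypothetical helper; it compares y-cruncher's 19 digits per 8 bytes with the 12 digits per 5 bytes discussed further down, in decimal TB and binary TiB.

```shell
# storage_tb DIGITS DIGITS_PER_CHUNK BYTES_PER_CHUNK
# Space needed to hold DIGITS decimal digits in fixed-size chunks
# (file headers and metadata are ignored).
storage_tb() {
  awk -v n="$1" -v d="$2" -v b="$3" 'BEGIN {
    chunks = int((n + d - 1) / d)    # round up to whole chunks
    bytes = chunks * b
    printf "%.2f TB (%.2f TiB)\n", bytes / 1e12, bytes / 1024^4
  }'
}

storage_tb 50e12 19 8   # y-cruncher .ycd packing
storage_tb 50e12 12 5   # 12 digits per 40-bit chunk
```

On these assumptions the first line comes out at about 21.05 TB (19.15 TiB) and the second at about 20.83 TB (18.95 TiB).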

It so happens that the only server I have with this sort of storage has 10 x 16TB HDDs, which I have configured as software RAID 6. This effectively gives me the capacity of 8 disks, with redundancy (parity) of 2: 8 x 16TB = 128TB of raw, unformatted storage. Drive capacities are quoted in decimal units (1TB = 10^12 bytes), whereas df -h reports binary units (1TiB = 2^40 bytes, which it labels simply "T"). As you can see below, by the time the array is formatted and shown in TiB, it comes to 116TiB, which should still be more than enough.
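A quick check of the unit arithmetic, as a sketch: `raid6_capacity` is a hypothetical helper, and it ignores filesystem and RAID metadata overhead. RAID 6 gives up two disks' worth of space to parity.

```shell
# raid6_capacity DISKS SIZE_TB: usable RAID 6 capacity (two disks' worth
# of parity), in decimal TB and binary TiB, before filesystem overhead.
raid6_capacity() {
  awk -v n="$1" -v s="$2" 'BEGIN {
    bytes = (n - 2) * s * 1e12       # drive sizes are decimal TB
    printf "%d TB = %.1f TiB\n", bytes / 1e12, bytes / 1024^4
  }'
}

raid6_capacity 10 16
```

This prints `128 TB = 116.4 TiB`, so most of the gap between 128 and the 116T shown by df -h is unit conversion rather than formatting overhead.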

    # df -h /cacheraid
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/bcache0    116T   38T   73T  35% /cacheraid

The reason it is set up as cacheraid is that I am currently exploring bcache, a solution that caches smaller files on SSD; since these files are going to be large, it won't make any difference here.

Calculating Efficiency of Storage

You may have noticed my 'efficiency' ratings of the different file formats for storing the digits. Here is how I calculated them:

There are 8 bits in a byte, so each byte can store any value from 0-255 (0-FF hexadecimal). As stated in the article, the most efficient way to store the data would be in the binary format it is calculated in. However, it is not possible to convert just a subsection of digits from one number base to another (unless one base is a power of the other, as with hexadecimal and binary), so we need to store the decimal digits themselves.
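To illustrate the exception, here is a small sketch (`hex_to_bin` is a hypothetical helper) that converts hexadecimal to binary one digit at a time. It only works because 16 = 2^4, so each hex digit maps to exactly 4 bits independently of its neighbours; no decimal digit maps to a fixed group of bits in the same way.

```shell
# hex_to_bin HEX: convert a hex string to binary one digit at a time.
# Valid only because every hex digit corresponds to exactly 4 bits.
hex_to_bin() {
  hex=$1 out=
  while [ -n "$hex" ]; do
    rest=${hex#?}
    d=${hex%"$rest"}                 # current hex digit
    v=$((0x$d)) bits=
    for i in 3 2 1 0; do             # emit its 4 bits, most significant first
      bits="$bits$(( (v >> i) & 1 ))"
    done
    out="$out$bits"
    hex=$rest
  done
  printf '%s\n' "$out"
}

hex_to_bin 3f   # → 00111111
```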

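This efficiency is calculated as the number of decimal digits stored, d, divided by the number of decimal digits' worth of information that b bytes can hold. Each byte has 256 possible values, so it holds log₁₀ 256 ≈ 2.408 decimal digits' worth:

$$\text{efficiency} = \frac{d}{b \log_{10} 256}$$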
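The figures below can be reproduced with a one-line awk calculation. This is a sketch; `efficiency` is a hypothetical helper name, and awk's `log` is the natural logarithm, so `log(256) / log(10)` is log₁₀ 256.

```shell
# efficiency DIGITS BYTES: percentage of a chunk's capacity used when
# DIGITS decimal digits are stored in BYTES bytes,
# i.e. 100 * DIGITS / (BYTES * log10(256)).
efficiency() {
  awk -v d="$1" -v b="$2" 'BEGIN {
    printf "%.2f\n", 100 * d / (b * log(256) / log(10))
  }'
}

efficiency 1 1     # one digit per byte
efficiency 2 1     # BCD
efficiency 19 8    # 19 digits per 64 bits
efficiency 12 5    # 12 digits per 40 bits
efficiency 77 32   # 77 digits per 256 bits
```

These print 41.52, 83.05, 98.62, 99.66 and 99.92 respectively.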

Storing 1 decimal digit (0-9) in each byte (0-255), as plain ASCII text does, gives an efficiency of only 41.5%. This would be incredibly wasteful, as discussed.

BCD (binary-coded decimal) stores 2 decimal digits in each byte, one per 4-bit nibble, so it is double the efficiency of single digits (83%) and has the other benefits mentioned in the article.
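A minimal sketch of packed BCD, assuming an even number of digits (`bcd_pack` is a hypothetical helper). Each pair of digits becomes one byte, high nibble first, which is why a hex dump of BCD data reads back as the original digits.

```shell
# bcd_pack DIGITS: write an even-length string of decimal digits to stdout
# as packed BCD, one digit per 4-bit nibble (high nibble first).
bcd_pack() {
  digits=$1
  while [ -n "$digits" ]; do
    rest=${digits#??}
    pair=${digits%"$rest"}               # first two digits, e.g. "14"
    hi=${pair%?} lo=${pair#?}
    byte=$(( hi * 16 + lo ))             # "14" -> 0x14
    printf "\\$(printf '%03o' "$byte")"  # emit the raw byte via an octal escape
    digits=$rest
  done
}

# The hex dump of the packed bytes reads as the digits themselves.
bcd_pack 14159265 | od -A n -t x1
```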

The default format of y-cruncher stores 19 digits per 64-bit (8-byte) chunk (10^19 < 2^64), which is 98.62% efficient.

I am choosing 12 digits per 40-bit (5-byte) chunk (10^12 < 2^40), which is 99.66% efficient.
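A minimal sketch of packing one 12-digit group into a 40-bit value, shown as 10 hex digits (5 bytes). `pack12` and `unpack12` are hypothetical helpers that rely on the shell's 64-bit arithmetic; byte order and how the groups are laid out in a file are left open here.

```shell
# pack12 DIGITS: print a 12-digit decimal group as a 40-bit value in hex
# (10 hex digits = 5 bytes). 10^12 - 1 < 2^40, so every group fits.
pack12() {
  group=$1
  # Strip leading zeros so the shell does not read the value as octal.
  n=${group#"${group%%[!0]*}"}
  printf '%010x\n' "${n:-0}"
}

# unpack12 HEX: reverse of pack12, restoring any leading zeros.
unpack12() {
  printf '%012d\n' "$((0x$1))"
}

pack12 999999999999                 # → e8d4a50fff (largest possible group)
unpack12 "$(pack12 000000000042)"   # → 000000000042
```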

(The only combination I could find that is more efficient than this without getting ridiculous is 77 digits per 256-bit (32-byte) chunk, which would give just under 99.92% efficiency. However, as this exceeds standard 64-bit integer arithmetic, the extra complication is not worth it.)
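One way to search for such combinations, as a sketch: for each chunk size of b bytes, the most digits that always fit is the largest d with 10^d ≤ 2^(8b), and the efficiency is the same ratio as above. `max_digits` and `chunk_table` are hypothetical helpers using floating-point awk, which is accurate enough for these small sizes.

```shell
# max_digits BYTES: the largest d with 10^d <= 2^(8*BYTES), i.e. how many
# decimal digits are guaranteed to fit in a chunk of BYTES bytes.
max_digits() {
  awk -v b="$1" 'BEGIN { print int(8 * b * log(2) / log(10)) }'
}

# chunk_table MAX: list chunk sizes of 1..MAX bytes with their digit count
# and efficiency, d / (b * log10(256)), as a percentage.
chunk_table() {
  b=1
  while [ "$b" -le "$1" ]; do
    awk -v b="$b" -v d="$(max_digits "$b")" 'BEGIN {
      printf "%2d bytes: %2d digits, %.2f%%\n", b, d, 100 * d / (b * log(256) / log(10))
    }'
    b=$((b + 1))
  done
}

chunk_table 32
```

Besides 12 digits in 5 bytes and 77 digits in 32 bytes, the table also turns up 65 digits in 27 bytes (216 bits, about 99.97%), an equally awkward width that would likewise need arithmetic beyond 64 bits.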

PART 2 – WIP

Getting the files

So I downloaded each of the files in turn with the following command:

n=0 ; while [ $n -le 49 ] ; do wget https://storage.googleapis.com/pi50t/Pi%20-%20Dec%20-%20Chudnovsky/Pi%20-%20Dec%20-%20Chudnovsky%20-%20$n.ycd ; n=$(( $n + 1 )) ; done

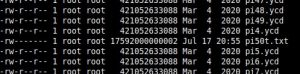

Then I renamed them to simpler filenames:

n=0 ; while [ $n -le 49 ] ; do mv "Pi%20-%20Dec%20-%20Chudnovsky%20-%20$n.ycd" pi$n.ycd ; n=$(( $n + 1 )) ; done
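If you are repeating this, a possible simplification (an untested sketch) is to let wget name each file as it downloads using its `-O` option, which removes the rename step and any dependence on the filename wget would otherwise pick. The URL pattern and the 0-49 range are taken from the commands above; `pi_url` and `fetch_all` are hypothetical helper names.

```shell
base='https://storage.googleapis.com/pi50t/Pi%20-%20Dec%20-%20Chudnovsky'

# pi_url N: URL of chunk N, using the same pattern as the wget loop above.
pi_url() {
  printf '%s\n' "$base/Pi%20-%20Dec%20-%20Chudnovsky%20-%20$1.ycd"
}

# fetch_all: download chunks 0..49, saving each directly as piN.ycd,
# and stop at the first failed download.
fetch_all() {
  n=0
  while [ "$n" -le 49 ]; do
    wget -O "pi$n.ycd" "$(pi_url "$n")" || return 1
    n=$((n + 1))
  done
}
```

Calling `fetch_all` starts the download, which runs to many terabytes in total.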

Converting the files

Then I tried converting all the chunks into ASCII format using y-cruncher's built-in functionality. Unfortunately, it bombed out after 17 trillion digits. I neglected to note the exact error, but that is more than enough digits to work with for now anyway.